Voiceprints and their properties

Signal processing

Physically, sounds are changes in air pressure that propagate as waves. A microphone captures the sound and converts it to an electrical signal, which is then measured at discrete time steps by an analog-to-digital converter. Telephony signals are typically measured (sampled) 8000 times per second. The resulting series of data points is the raw digital signal, which is the starting point for voiceprint extraction. The signal contains information related to the speaker, such as the shape of the speaker’s vocal tract; this is the information we want to extract. However, the signal also contains information about factors that are irrelevant for speaker recognition, such as the words spoken, the type of telephone, background noise and the emotional state of the speaker. We want to discard this information, or at least suppress it, in the final voiceprint.

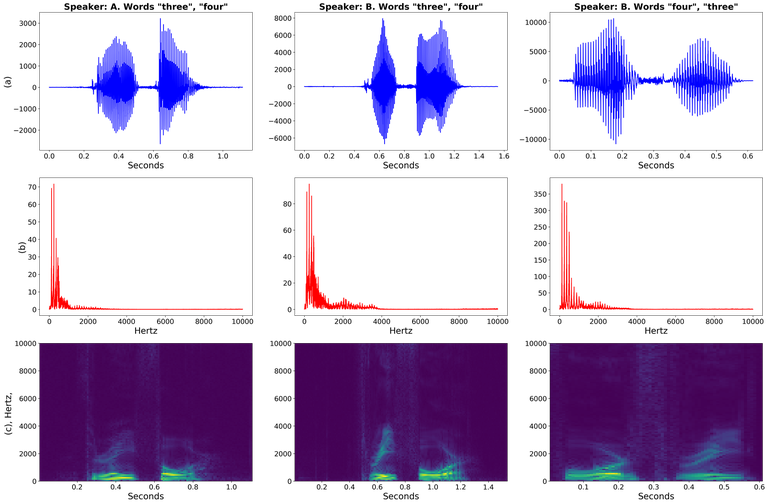

The raw digital signal is not easy to interpret (see Figure 1a), so before it is presented to a machine learning algorithm, signal processing techniques are often used to create a better representation of it. At the heart of signal processing is the Fourier transform, a mathematical operation that converts a signal from its time representation to its frequency representation. That is, instead of being represented by its values at different times, the signal is represented by its values at different frequencies. This representation is, however, not ideal for signals whose content changes significantly over time. For example, Figure 1b shows that the frequency content of a speech signal hardly changes if the order of two words is swapped (all subfigures in the second row of the figure look very similar). This means that information about the order of events is almost completely hidden in this representation. For speech signals it is therefore preferable to divide the signal into short segments within which the frequency content is reasonably stationary, and to apply the Fourier transform to each segment separately. Typically a new segment starts every 0.01 s (each segment is usually somewhat longer, around 0.025 s, so that neighbouring segments overlap). This is shown in Figure 1c. With this representation, both the time and the frequency information are preserved in a well-structured form.
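The effect described above can be reproduced with a small synthetic sketch. It assumes NumPy and replaces the two words with two pure tones (400 Hz and 1200 Hz, half a second each); the 25 ms Hamming-windowed segments taken every 0.01 s are common choices, not necessarily those used in ROXANNE, and `tone` and `stft_magnitude` are hypothetical helper names. Because the two halves have equal length, swapping them amounts to a circular shift, which changes only the phase of the Fourier transform, so here the whole-signal magnitude spectra are exactly equal; for real speech, as in Figure 1b, they are merely very similar.

```python
import numpy as np

SAMPLE_RATE = 8000  # telephony rate from the text: 8000 samples per second


def tone(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """A pure sine tone, standing in for one 'word' of speech."""
    t = np.arange(int(duration_s * sample_rate)) / sample_rate
    return np.sin(2 * np.pi * freq_hz * t)


def stft_magnitude(signal, sample_rate=SAMPLE_RATE, frame_len_s=0.025, hop_s=0.010):
    """Magnitude spectrum of short, overlapping, Hamming-windowed segments."""
    frame_len = int(round(frame_len_s * sample_rate))  # 200 samples at 8 kHz
    hop = int(round(hop_s * sample_rate))              # 80 samples, i.e. 0.01 s
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hamming(frame_len)
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # shape (n_frames, frame_len // 2 + 1)


# Two half-second "words" (400 Hz and 1200 Hz tones) spoken in both orders.
a, b = tone(400, 0.5), tone(1200, 0.5)
ab, ba = np.concatenate([a, b]), np.concatenate([b, a])

# Fourier transform of the whole signal: the magnitudes are identical,
# so the order of the two "words" cannot be recovered from it.
print(np.allclose(np.abs(np.fft.rfft(ab)), np.abs(np.fft.rfft(ba))))  # True

# Segment-wise transform: the dominant frequency moves over time.
S_ab, S_ba = stft_magnitude(ab), stft_magnitude(ba)
bin_hz = SAMPLE_RATE / 200  # frequency spacing of the bins: 40 Hz
print(S_ab.shape)                                             # (98, 101)
print(S_ab[0].argmax() * bin_hz, S_ab[-1].argmax() * bin_hz)  # 400.0 1200.0
print(S_ba[0].argmax() * bin_hz, S_ba[-1].argmax() * bin_hz)  # 1200.0 400.0
```

One second of audio yields 98 rather than 100 segments here only because no padding is added at the edges of the signal.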

After a few additional signal processing steps, one obtains a vector, i.e., a collection of numbers (64 of them in the case of the ROXANNE platform), for each 0.01 s of the signal. These vectors are referred to as feature vectors and are the input to the voiceprint extraction module. This step is common to automatic speech recognition (converting speech to text) and speaker recognition (recognizing speakers in audio).

Figure 1: Three speech signals represented in three different ways. a: the signal as a function of time. b: the signal as a function of frequency. c: the mixed time-frequency representation, in which the vertical axis denotes frequency, the horizontal axis denotes time and the intensity of the color denotes the strength of the corresponding time-frequency bin.
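The text does not specify the additional processing steps that turn each segment into a feature vector. A widespread choice, assumed in the sketch below, is to pass each segment's power spectrum through a bank of triangular filters spaced on the mel scale (which roughly follows the frequency resolution of human hearing) and to take the logarithm. The 64 filters match the vector size stated for ROXANNE; the 25 ms segments, the 256-point FFT and the helper names (`mel_filterbank`, `log_mel_features`) are hypothetical illustrations.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels=64, n_fft=256, sample_rate=8000):
    """Triangular filters with centres evenly spaced on the mel scale (assumed design)."""
    bin_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)  # frequency of each FFT bin
    edges = mel_to_hz(np.linspace(0.0, hz_to_mel(sample_rate / 2), n_mels + 2))
    fb = np.zeros((n_mels, len(bin_freqs)))
    for m in range(n_mels):
        left, centre, right = edges[m], edges[m + 1], edges[m + 2]
        rising = (bin_freqs - left) / (centre - left)
        falling = (right - bin_freqs) / (right - centre)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel_features(signal, sample_rate=8000, n_mels=64, n_fft=256):
    """One n_mels-dimensional feature vector per 0.01 s of signal."""
    frame_len = int(round(0.025 * sample_rate))  # 25 ms segments (assumption)
    hop = int(round(0.010 * sample_rate))        # a new segment every 10 ms
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_len] for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames * np.hamming(frame_len), n=n_fft, axis=1)) ** 2
    energies = power @ mel_filterbank(n_mels, n_fft, sample_rate).T  # (n_frames, n_mels)
    return np.log(energies + 1e-10)  # the small floor avoids log(0)


rng = np.random.default_rng(0)
audio = rng.standard_normal(8000)  # stand-in for one second of 8 kHz audio
feats = log_mel_features(audio)
print(feats.shape)  # (98, 64)
```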

Neural network

From the signal processing stage, we have obtained 100 feature vectors per second, each representing the frequency content of the signal during the corresponding 0.01 s time interval.

Each feature vector is then processed by a deep neural network (DNN) to produce a new, modified vector. Deep neural networks are very flexible models that can, in principle, approximate any continuous function to arbitrary accuracy. When processing a feature vector, the DNN takes as input not only that particular feature vector but also some of the neighbouring ones. This allows the DNN to take the surrounding speech into account, which is beneficial since speech sounds are affected by their context. After this stage there are still 100 vectors per second, although each is now somewhat larger than before (256 numbers instead of 64 in the system currently used in the ROXANNE platform).
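A single context-aware layer of the kind described above can be sketched as follows. It is a hypothetical illustration, not the ROXANNE network: each 64-dimensional feature vector is concatenated with its two neighbours on each side (the context size is an assumption) and mapped to 256 numbers by an affine transform followed by a ReLU, using random, untrained weights. Networks such as the x-vector extractor [3] stack several layers of this kind, so that the total context grows with depth.

```python
import numpy as np


def splice(features, context=2):
    """Concatenate each frame with its `context` neighbours on both sides.

    Edge frames are repeated so there is still one output row per input frame.
    """
    n_frames = features.shape[0]
    padded = np.pad(features, ((context, context), (0, 0)), mode="edge")
    return np.concatenate(
        [padded[k:k + n_frames] for k in range(2 * context + 1)], axis=1)


def context_layer(features, weights, bias, context=2):
    """Hypothetical frame-level layer: splice, affine transform, ReLU."""
    return np.maximum(0.0, splice(features, context) @ weights + bias)


rng = np.random.default_rng(0)
feats = rng.standard_normal((100, 64))         # about 1 s of 64-dim feature vectors
W = 0.05 * rng.standard_normal((5 * 64, 256))  # untrained random weights
b = np.zeros(256)
hidden = context_layer(feats, W, b)
print(hidden.shape)  # (100, 256): still 100 vectors per second, now 256-dim
```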

The sequence of vectors is then “pooled” into a single vector whose dimension is fixed regardless of the duration of the utterance. This is typically achieved by taking the mean and/or the standard deviation of the vectors over the sequence. The fixed-dimension vector is processed further by a second DNN. The voiceprint can be the output of any intermediate layer of this DNN; these outputs are also fixed-size vectors.
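Statistics pooling, i.e., concatenating the per-dimension mean and standard deviation over time, takes only a few lines. The sizes below follow the text (256-dimensional vectors, 100 per second); the random inputs and the single affine layer standing in for the second DNN are hypothetical.

```python
import numpy as np


def stats_pooling(frames):
    """Map a (n_frames, dim) sequence to one vector of length 2 * dim:
    the per-dimension mean followed by the per-dimension standard deviation."""
    return np.concatenate([frames.mean(axis=0), frames.std(axis=0)])


rng = np.random.default_rng(0)
short_utt = rng.standard_normal((300, 256))   # about 3 s at 100 vectors per second
long_utt = rng.standard_normal((6000, 256))   # about 60 s
print(stats_pooling(short_utt).shape, stats_pooling(long_utt).shape)  # (512,) (512,)

# Reordering the frames does not change the pooled vector.
print(np.allclose(stats_pooling(short_utt), stats_pooling(short_utt[::-1])))  # True

# Hypothetical first layer of the second DNN; its output could serve as the voiceprint.
W_seg = 0.05 * rng.standard_normal((512, 256))
voiceprint = stats_pooling(short_utt) @ W_seg
print(voiceprint.shape)  # (256,)
```

Because the mean and standard deviation ignore the order of the frames, each individual frame has only a small influence on the pooled vector of a long utterance.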

Estimation of model parameters (training)

The DNN voiceprint extractor has several tens of millions of parameters. For the voiceprints to be useful, the parameters need to be estimated so that the voiceprints contain as much as possible of the speaker-related information in the speech and, ideally, as little as possible of other information, such as which words were spoken or the emotional state of the speaker. To this end we need a training set, i.e., a large collection of speech data from which the parameters are estimated. Typically, the training set contains 5’000-15’000 speakers, each with many recordings.

A common and efficient way to optimize the model parameters (also used for the model in the ROXANNE platform) is to train the network to classify, given a recording, which of the speakers in the training set is speaking in it, based on the voiceprint. That is, the final output of the DNN described above is a set of probabilities, one per training speaker. All parameters of the voiceprint (embedding) extractor are optimized jointly with the objective of making the probability of the correct speaker as high as possible. This training objective leads to voiceprints that contain information useful for predicting the speakers in the training set. Because the training set contains so many speakers, the resulting extractor generalizes well and also produces accurate voiceprints for speakers that were not in the training set.
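The training objective can be written as the softmax cross-entropy loss: the negative log-probability of the correct training speaker, averaged over recordings, which is small exactly when that probability is high. The sketch assumes a single hypothetical output layer (`W`, `b`) on top of the voiceprint and 5000 training speakers; the text does not say which loss variant ROXANNE uses, and the gradient-based joint update of all parameters is not shown.

```python
import numpy as np


def softmax(logits):
    """Turn scores into probabilities that sum to one along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def speaker_classification_loss(voiceprints, speaker_ids, W, b):
    """Mean cross-entropy of identifying each recording's training speaker.

    voiceprints: (batch, emb_dim); speaker_ids: (batch,) integer labels;
    W: (emb_dim, n_speakers) and b: (n_speakers,) form a hypothetical output layer.
    """
    probs = softmax(voiceprints @ W + b)  # one probability per training speaker
    p_correct = probs[np.arange(len(speaker_ids)), speaker_ids]
    return float(-np.log(p_correct).mean())  # small when the correct speaker is likely


rng = np.random.default_rng(0)
n_speakers, emb_dim, batch = 5000, 256, 8
x = rng.standard_normal((batch, emb_dim))
ids = rng.integers(0, n_speakers, size=batch)

# An untrained (all-zero) output layer spreads probability evenly: loss = log(5000).
W0, b0 = np.zeros((emb_dim, n_speakers)), np.zeros(n_speakers)
print(speaker_classification_loss(x, ids, W0, b0))  # about 8.517

# Raising the scores of the correct speakers lowers the loss.
b1 = b0.copy()
b1[ids] = 5.0
print(speaker_classification_loss(x, ids, W0, b1) < speaker_classification_loss(x, ids, W0, b0))  # True
```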

Properties of voiceprints

The obtained speaker embeddings must naturally contain information about the speaker identity for speaker recognition to work. However, they also contain other information present in the original signal, such as gender, language/dialect and spoken words/phonemes. Some of this information (e.g., words/phonemes) is undesirable because it makes comparing speakers harder. For example, if word information is present in the voiceprints, the voiceprints of two different speakers uttering the same word could become similar enough for the system to conclude that they are the same speaker. The design of the voiceprint extractor mitigates this in two main ways. First, the pooling mechanism discussed earlier mixes the information from the whole utterance into the voiceprint, so as long as the utterance is long enough, the effect of individual words evens out. Second, the parameters are optimized for classifying the training speakers, a task that requires the voiceprint to contain mainly speaker-related information. A few studies have, however, found that it is possible to infer from the embedding which words were spoken if the number of possible words is small [4,5]. On the other hand, other studies have shown that special techniques for estimating the model parameters can further suppress information unrelated to the speaker, such as phonetic content and gender, without reducing speaker recognition performance [6,7].

Summary

A voiceprint is a fixed-size vector (i.e., a collection of numbers) that is extracted from audio by means of signal processing and machine learning techniques. By measuring how similar the voiceprints from two recordings are, we can estimate how likely it is that the two recordings contain the same speaker. This is an important feature of the ROXANNE platform, since the identities of speakers in recordings from criminal investigations are usually not known. For example, if criminal investigators intercept calls on a phone number, voiceprints can be used to check whether the person speaking on that phone changes from call to call. They can also be used to detect whether the conversation partner in different calls is the same person.
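The text does not state how two voiceprints are compared. One common choice, assumed in this sketch, is the cosine similarity of the two vectors checked against a threshold; many systems instead use a trained scoring model such as PLDA. The synthetic 256-dimensional vectors, the helper names and the 0.5 threshold are hypothetical.

```python
import numpy as np


def cosine_score(v1, v2):
    """Cosine similarity of two voiceprints: near 1 = similar, near 0 = unrelated."""
    return float(v1 @ v2 / (np.linalg.norm(v1) * np.linalg.norm(v2)))


def same_speaker(v1, v2, threshold=0.5):
    """Hypothetical decision rule; a real threshold must be tuned on labelled data."""
    return cosine_score(v1, v2) >= threshold


rng = np.random.default_rng(0)
speaker = rng.standard_normal(256)                 # hypothetical "true" voice of one person
call_1 = speaker + 0.3 * rng.standard_normal(256)  # two calls by that person,
call_2 = speaker + 0.3 * rng.standard_normal(256)  # each with its own variability
call_3 = rng.standard_normal(256)                  # a call by someone else
print(round(cosine_score(call_1, call_2), 2), round(cosine_score(call_1, call_3), 2))
print(same_speaker(call_1, call_2), same_speaker(call_1, call_3))
```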

[1] N. Dehak, P. J. Kenny, R. Dehak, P. Dumouchel and P. Ouellet, "Front-End Factor Analysis for Speaker Verification," in IEEE Transactions on Audio, Speech, and Language Processing, vol. 19, no. 4, pp. 788-798, May 2011, doi: 10.1109/TASL.2010.2064307.

[2] Ehsan Variani, Xin Lei, Erik McDermott, Ignacio Lopez Moreno, Javier Gonzalez-Dominguez, “Deep neural networks for small footprint text-dependent speaker verification,” in Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2014.

[3] D. Snyder, D. Garcia-Romero, D. Povey, and S. Khudanpur, “Deep neural network embeddings for text-independent speaker verification,” in Proceedings of Interspeech, pp. 999–1003, 2017.

[4] Shuai Wang, Yanmin Qian, and Kai Yu, “What does the speaker embedding encode?,” in Proceedings of Interspeech, pp. 1497–1501, 2017.

[5] Desh Raj, David Snyder, Daniel Povey, Sanjeev Khudanpur, “Probing the information encoded in x-vectors,” in Proceedings of IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 2019.

[6] Shuai Wang, Johan Rohdin, Lukáš Burget, Oldřich Plchot, Yanmin Qian, Kai Yu, Jan “Honza” Černocký, “On the Usage of Phonetic Information for Text-independent Speaker Embedding Extraction,” in Proceedings of Interspeech, pp. 1148–1152, 2019.

[7] Paul-Gauthier Noé, Mohammad Mohammadamini, Driss Matrouf, Titouan Parcollet, Andreas Nautsch, Jean-François Bonastre, “Adversarial Disentanglement of Speaker Representation for Attribute-Driven Privacy Preservation,” in Proceedings of Interspeech, pp. 1902–1906, 2021.