ROXANNE Technology updates – The Autocrime platform

The Autocrime platform has been updated continuously over the last six months, integrating additional features and taking into account feedback from LEAs based on their experience. The latest release is intended to be installed on users’ machines (e.g., laptops) and supports several operating systems, namely Ubuntu Linux, macOS (including M1 chipsets) and Windows (using a virtual machine).

Key features that were added or improved recently include the following:

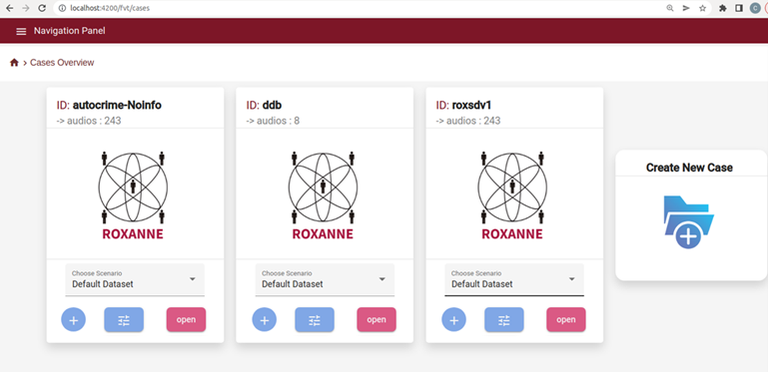

- Ability to create new cases via the Graphical User Interface and upload the files to be processed (see figure below),

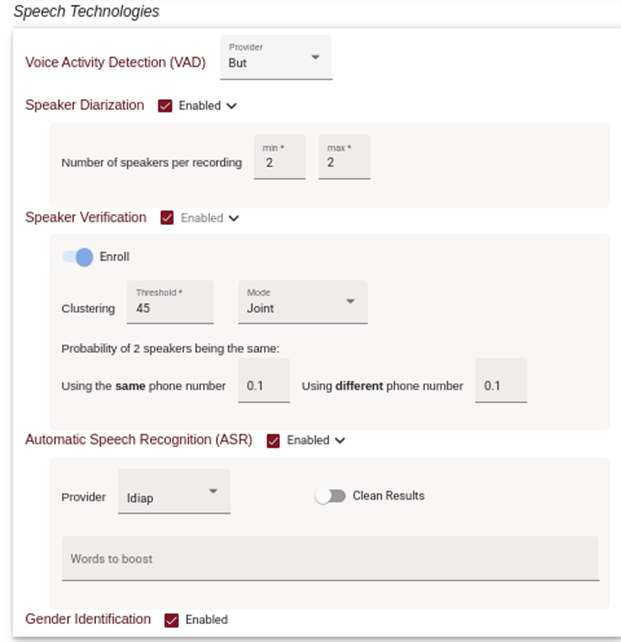

- Ability to choose the technologies to be used when processing a particular case, including support for choosing a provider when alternative implementations exist and for configuring the parameters of these technologies (the figure below shows the options for speech technologies),

- Ability to diarize both stereo and mono audio files; the latter may contain either multiple speakers (to cater for telecommunication service providers that supply mono files for wiretapped calls) or a single speaker (to allow the enrollment of speaker information),

- Ability to perform open-set speaker identification (i.e., some or all speakers are unknown) by considering additional information (e.g., from call-detail records),

- Ability to transcribe English speech to text while taking into account any keywords provided by the user,

- Ability to identify Named Entities such as locations, dates and persons, as well as to include the latter as part of the network topology,

- Ability to identify the most-likely topic of the conversation based on a set of topics provided by the user,

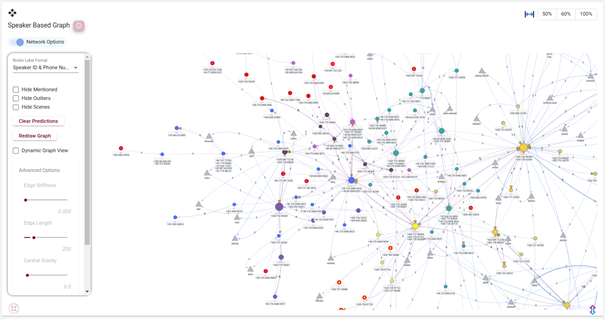

- Ability to visualize the results of Social Network Analysis (i.e., Community detection, Social Influence analysis, Outlier detection) and to predict links between speakers that, although not evidenced, are likely to exist (see figure below),

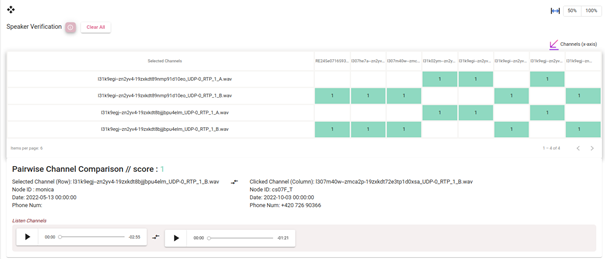

- Ability to compare the voiceprints of selected conversations with the voiceprints generated for the remaining conversations, in order to identify cases where the user should check the outputs of speaker identification/verification/clustering (see figure below),

- Ability to suggest changes to the speaker clustering by merging nodes or associating calls with different persons, and to ensure that these changes are taken into account when the same case is processed in the future,

- Ability to save the changes above as a new scenario to test/validate users’ hypotheses,

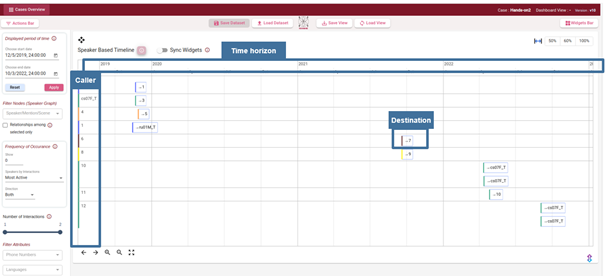

- Ability to analyze the Autocrime results along several dimensions (i.e., speaker, phone number and time) (see figure below),

- Ability to filter the results using a wide range of filters, e.g., by community, speaker activity, phone number used or conversation topic,

- Ability to save and load different dashboard configurations.
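To give a feel for the voiceprint comparison feature listed above, here is a minimal sketch in Python. It is not the Autocrime implementation, which is not described in this update. It assumes that each voiceprint is a plain numeric vector, that similarity is measured with cosine similarity, and that a conversation is worth reviewing when its voiceprint closely matches the selected one but has been assigned to a different speaker. The function names, the data layout and the `threshold` value are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector carries no direction to compare
    return dot / (norm_a * norm_b)


def flag_for_review(selected, others, threshold=0.8):
    """Return (conversation_id, similarity) pairs that may need a manual check.

    `selected` is a dict with hypothetical keys "speaker" and "voiceprint".
    `others` maps a conversation id to a (speaker, voiceprint) tuple.
    A conversation is flagged when its voiceprint is at least `threshold`
    similar to the selected one but carries a different speaker label.
    """
    flagged = []
    for conv_id, (speaker, voiceprint) in others.items():
        sim = cosine_similarity(selected["voiceprint"], voiceprint)
        if sim >= threshold and speaker != selected["speaker"]:
            flagged.append((conv_id, sim))
    # Most similar conversations first, so the likeliest mismatches lead.
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Toy data for illustration only (hypothetical three-dimensional voiceprints).
selected = {"speaker": "A", "voiceprint": [1.0, 0.0, 0.0]}
others = {
    "call_2": ("A", [0.9, 0.1, 0.0]),
    "call_3": ("B", [1.0, 0.05, 0.0]),
    "call_4": ("C", [0.0, 1.0, 0.0]),
}
for conv_id, sim in flag_for_review(selected, others):
    print(f"{conv_id}: similarity {sim:.3f}")
```

In this toy data only `call_3` is flagged: its voiceprint is nearly identical to the selected one, yet it is labelled with a different speaker. Real voiceprints have many more dimensions, and a suitable threshold would have to be tuned on representative data.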

Furthermore, the partners are working towards integrating additional features, such as the ability to add further relationships among the speakers based on the voices heard, faces detected and scenes identified when video files are processed. We hope the final tool will be of great help to LEAs and will contribute to making the world a safer place.