Do Off-the-Shelf Named Entity Recognition (NER) Techniques Work in the Context of Criminal Investigation?

The ROXANNE project (Real-time network, text, and speaker analytics for combating organized crime) researches new technologies for law enforcement investigations of organized crime. Our NER-related work in the project transcribes telephone conversations between (fictitious) ‘suspects’ and other external speakers using automatic speech recognition, and then automatically identifies persons (names), places, and times using NER techniques. This could help investigators semi-automate large criminal investigations.

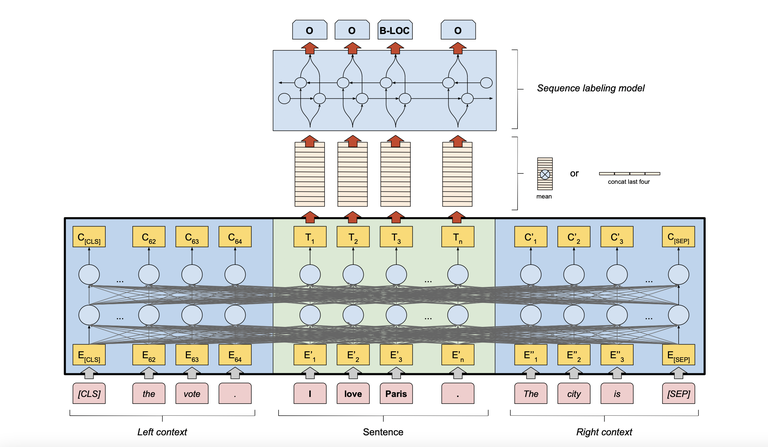

In our initial investigations, we use the off-the-shelf model FLERT [1], which fine-tunes the transformer itself on the NER task and adds only a linear layer for word-level predictions. Figure 1 illustrates the approach.

Figure 1: To obtain document-level features for a sentence that we wish to tag (“I love Paris”, shaded green), we add 64 tokens of left context and 64 tokens of right context (shaded blue). Because self-attention is calculated over all input tokens, the representations of the sentence’s tokens are influenced by the left and right context.
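The context mechanism in Figure 1 can be sketched in a few lines of plain Python. This is only an illustration, not the FLERT implementation: the function name `add_context`, the toy document, and the small `context_size` used in the example are assumptions made for readability.

```python
from typing import List, Tuple


def add_context(
    sentences: List[List[str]], index: int, context_size: int = 64
) -> Tuple[List[str], int, int]:
    """Surround one sentence with up to `context_size` tokens on each side.

    Returns (tokens, start, end) so that tokens[start:end] is the sentence
    to tag; only those positions would receive NER predictions.
    """
    target = sentences[index]
    # Flatten everything before and after the target sentence.
    left = [tok for sent in sentences[:index] for tok in sent]
    right = [tok for sent in sentences[index + 1:] for tok in sent]
    # Guard against context_size == 0, because left[-0:] would keep everything.
    left_ctx = left[-context_size:] if context_size > 0 else []
    right_ctx = right[:context_size]
    tokens = left_ctx + target + right_ctx
    start = len(left_ctx)
    return tokens, start, start + len(target)


# Hypothetical three-sentence document; the middle sentence is the one to tag.
doc = [
    "I flew in yesterday .".split(),
    "I love Paris".split(),
    "The food is great .".split(),
]
tokens, start, end = add_context(doc, index=1, context_size=3)
print(tokens[start:end])  # the sentence to tag
print(tokens)             # the sentence plus left and right context
```

The whole `tokens` list is what the transformer would see, while the `start` and `end` offsets mark the positions whose predictions are kept.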

We further fine-tune our model on the OntoNotes 5 dataset. The approach adds a single linear layer to a transformer and fine-tunes the entire architecture on the NER task. To bridge the difference between subtoken modeling and token-level predictions, the architecture applies subword pooling to create token-level representations, which are then passed to the final linear layer. A common subword pooling strategy is “first” [2], which uses the representation of the first subtoken for the entire token. To train this architecture, prior works typically use the AdamW [3] optimizer, a very small learning rate, and a small, fixed number of epochs as a hard-coded stopping criterion [4]. We adopt a one-cycle training strategy [5], inspired by the HuggingFace transformers [6] implementation, in which the learning rate decreases linearly until it reaches 0 at the end of training. The model achieves an F1 score of 78%, where F1 is the harmonic mean of precision and recall.
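Two of the ingredients above, “first” subword pooling and a linearly decaying learning rate, are simple enough to sketch without any deep learning library. The function names, the one-dimensional toy vectors, and the peak learning rate below are illustrative assumptions, not values from our experiments.

```python
from typing import List, Sequence


def first_subword_pool(
    subword_vectors: Sequence[Sequence[float]], word_ids: Sequence[int]
) -> List[List[float]]:
    """Keep only the vector of the first subword of every word.

    `word_ids[i]` is the index of the word that subword `i` belongs to.
    """
    pooled: List[List[float]] = []
    previous = None
    for vector, word_id in zip(subword_vectors, word_ids):
        if word_id != previous:  # first subword of a new word
            pooled.append(list(vector))
            previous = word_id
    return pooled


def linear_decay_lr(step: int, total_steps: int, peak_lr: float) -> float:
    """Learning rate that falls linearly from `peak_lr` to 0 at `total_steps`."""
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / total_steps


# "I love Paris" split into the hypothetical subwords: I | love | Par | ##is
subword_vectors = [[1.0], [2.0], [3.0], [4.0]]
word_ids = [0, 1, 2, 2]
word_vectors = first_subword_pool(subword_vectors, word_ids)
print(word_vectors)  # one vector per word; "Paris" keeps the vector of "Par"

# Hypothetical peak learning rate, sampled at the start, middle, and end.
for step in (0, 50, 100):
    print(step, linear_decay_lr(step, total_steps=100, peak_lr=5e-6))
```

The pooled vectors are what the final linear layer would classify, one tag per word.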

As we described earlier in our article, there has been no significant work on recognizing these named entities in transcripts of telephone calls generated by automatic speech recognition (ASR). We make a first attempt using a factorized Time Delay Neural Network (TDNN-F) model, which is reasonably small compared to end-to-end models [7] and is based on the Kaldi ASR toolkit (https://github.com/kaldi-asr/kaldi). We ran NER on top of the ASR output and achieved an F1 score of 20%. This score is low because ASR errors often destroy the entities themselves. For instance, in the ground truth transcript of one call, a person says “I want to send it from brno to prague”, which contains two locations, brno and prague. Our ASR model transcribes this utterance as “i went to finish from birth to rock”, so both locations are missing from the generated output.
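To make the effect of such an ASR error on the score concrete, here is a minimal sketch of precision, recall, and F1 computed over sets of entity strings. This is a simplification: standard NER evaluation matches entity spans and types, and the function name `precision_recall_f1` is an assumption for this example.

```python
from typing import Set, Tuple


def precision_recall_f1(
    gold: Set[str], predicted: Set[str]
) -> Tuple[float, float, float]:
    """Set-based precision, recall, and F1 (harmonic mean of the two)."""
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Ground truth: "I want to send it from brno to prague"
gold_entities = {"brno", "prague"}

# ASR output: "i went to finish from birth to rock" contains no location at all.
asr_entities: Set[str] = set()
print(precision_recall_f1(gold_entities, asr_entities))

# Hypothetical case where ASR had preserved one of the two locations.
print(precision_recall_f1(gold_entities, {"brno"}))
```

Because both locations are lost in the transcript, no NER model can recover them from that text alone, whatever its quality on clean input.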

As the example shows, entities go missing in our ASR-transcribed calls. Motivated by prior research [8, 9], we perform boosting separately for known words (words that exist in the lexicon) and for words outside the lexicon (out-of-vocabulary boosting). The in-lexicon words are boosted with the lattice re-scoring and G boosting techniques [9]; with both techniques, a new decoding graph is created. The out-of-vocabulary words (OOVs) are first added to the lexicon, then to the language model and the grammar model, before the decoding graph is created. Boosting improved the F1 score from 20% to 23.2%.
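The actual boosting happens inside the Kaldi decoding graph and is not reproduced here. The sketch below only illustrates the first routing decision, namely splitting a list of words to boost into in-lexicon words and OOVs. The function name `split_boost_words` and the toy lexicon are assumptions for illustration.

```python
from typing import Iterable, List, Set, Tuple


def split_boost_words(
    boost_words: Iterable[str], lexicon: Set[str]
) -> Tuple[List[str], List[str]]:
    """Split words to boost into (in-lexicon, out-of-vocabulary) lists."""
    in_lexicon: List[str] = []
    oov: List[str] = []
    for word in boost_words:
        key = word.lower()  # assume a lowercase lexicon
        if key in lexicon:
            in_lexicon.append(key)  # candidate for lattice re-scoring / G boosting
        else:
            oov.append(key)  # must first be added to the lexicon and language model
    return in_lexicon, oov


# Hypothetical lexicon in which "prague" is known but "brno" is not.
toy_lexicon = {"i", "want", "to", "send", "it", "from", "prague"}
known, unknown = split_boost_words(["Brno", "Prague"], toy_lexicon)
print("in-lexicon:", known)
print("OOV:", unknown)
```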

To narrow the performance gap between NER on ground truth data and NER on ASR output, we experiment with a lattice-based approach, as suggested in [10]. A lattice is a representation of the alternative word sequences that are "sufficiently likely" for a particular utterance. The lattice generation algorithm ensures that each such word sequence is present in the lattice with the best possible alignment and weight, and that each word sequence is present only once. This is achieved by first creating a state-level lattice [11] and pruning it appropriately, and then determinizing it with a special determinization algorithm that picks the most likely alternative path. For lattices written to disk, each word sequence has only one path through the lattice. Because the lattice-based approach has proven to be efficient, we generate the 10-best ASR outputs from the lattice and run NER on each of them, removing duplicates by simply taking the union of all named entities. This yields an F1 score of 24%. When we instead choose the best ASR hypothesis (based on Levenshtein distance), the F1 score improves further to 26.8%.

This work is still in progress, and we need to significantly reduce the gap between NER on manual transcripts and NER on automatically generated transcripts of telephone conversations. As this research advances, the community should be able to extract persons, locations, and times from transcribed telephone calls.
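The two n-best strategies described above can be sketched as follows. The sketch assumes that the Levenshtein distance is computed at word level against the reference transcript; the n-best list beyond its first entry and the gazetteer-based `toy_tagger` are hypothetical stand-ins for real ASR output and a real NER model.

```python
from typing import Callable, List, Sequence, Set


def word_edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Word-level Levenshtein distance using two rolling rows."""
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def closest_hypothesis(reference: str, nbest: List[str]) -> str:
    """Pick the hypothesis with the smallest distance to the reference."""
    ref_words = reference.split()
    return min(nbest, key=lambda hyp: word_edit_distance(ref_words, hyp.split()))


def union_entities(nbest: List[str], tagger: Callable[[str], Set[str]]) -> Set[str]:
    """Tag every hypothesis and merge the entities, dropping duplicates."""
    entities: Set[str] = set()
    for hypothesis in nbest:
        entities |= tagger(hypothesis)
    return entities


def toy_tagger(text: str) -> Set[str]:
    """Hypothetical stand-in for an NER model: a two-word location gazetteer."""
    gazetteer = {"brno", "prague"}
    return {word for word in text.split() if word in gazetteer}


reference = "i want to send it from brno to prague"
nbest = [
    "i went to finish from birth to rock",      # 1-best from the example above
    "i want to send it from birth to prague",   # hypothetical alternative
    "i went to send it from brno to rock",      # hypothetical alternative
]
print(union_entities(nbest, toy_tagger))
print(closest_hypothesis(reference, nbest))
```

Note that selecting by distance to the reference needs the ground truth transcript, so under this assumption it measures what the n-best list could offer rather than something available at inference time.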

References

[1] Schweter, Stefan, and Alan Akbik. "Flert: Document-level features for named entity recognition." CoRR (2020).

[2] Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova: BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. NAACL-HLT (1) 2019: 4171-4186

[3] Zhenxun Zhuang, Mingrui Liu, Ashok Cutkosky, Francesco Orabona: Understanding AdamW through Proximal Methods and Scale-Freeness. CoRR abs/2202.00089 (2022)

[4] Conneau, Alexis, and Guillaume Lample. "Cross-lingual language model pretraining." Advances in neural information processing systems 32 (2019).

[5] Smith, Leslie N., and Nicholay Topin. "Super-convergence: Very fast training of residual networks using large learning rates." (2018).

[6] Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Jamie Brew: HuggingFace's Transformers: State-of-the-art Natural Language Processing. CoRR abs/1910.03771 (2019)

[7] Khonglah, B., Madikeri, S., Dey, S., Bourlard, H., Motlicek, P., & Billa, J. (2020, May). Incremental semi-supervised learning for multi-genre speech recognition. In ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 7419-7423). IEEE.

[8] Braun, R. A., Madikeri, S., & Motlicek, P. (2021, June). A comparison of methods for oov-word recognition on a new public dataset. In ICASSP 2021-2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 5979-5983). IEEE.

[9] Nigmatulina, I., Braun, R., Zuluaga-Gomez, J., & Motlicek, P. (2021). Improving callsign recognition with air-surveillance data in air-traffic communication. arXiv preprint arXiv:2108.12156.

[10] Juan Zuluaga-Gomez, Iuliia Nigmatulina, Amrutha Prasad, Petr Motlícek, Karel Veselý, Martin Kocour, Igor Szöke: Contextual Semi-Supervised Learning: An Approach to Leverage Air-Surveillance and Untranscribed ATC Data in ASR Systems. Interspeech 2021: 3296-3300

[11] Michel, W., Schlüter, R., Ney, H. (2019) Comparison of Lattice-Free and Lattice-Based Sequence Discriminative Training Criteria for LVCSR. Proc. Interspeech 2019, 1601-1605, doi: 10.21437/Interspeech.2019-2254